Delving into ONNX: Comprehending Computation Graphs and Structure

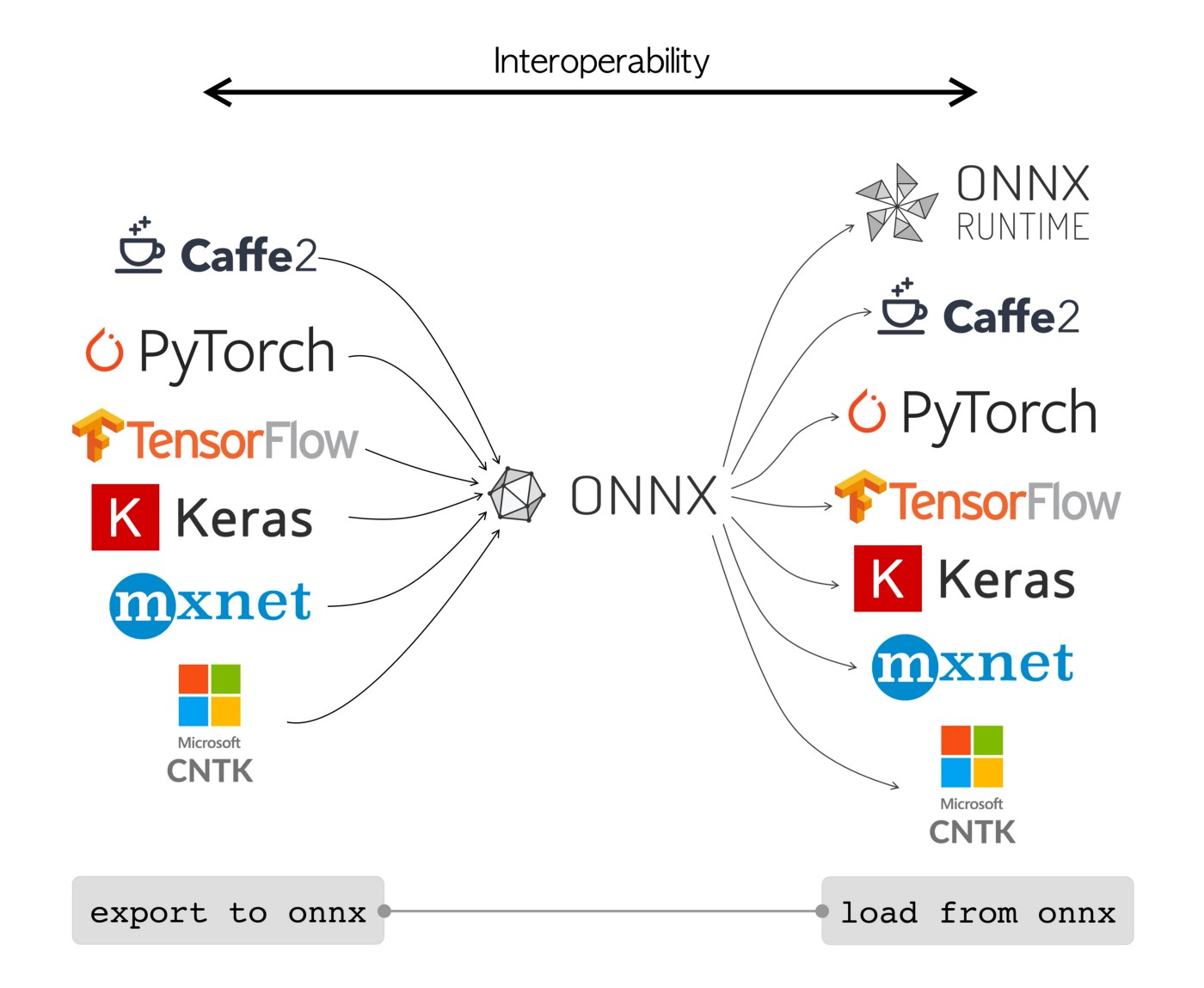

ONNX (Open Neural Network Exchange) is an open-source format designed to represent machine learning models. It aims to provide a standard way to describe deep learning models and enable interoperability between different frameworks. In this article, we’ll delve into the world of ONNX, exploring its core concepts, structure, and how it represents computation graphs.

Understanding ONNX

ONNX serves as a common framework to represent deep learning models, making it easier to move models between different frameworks and tools. It defines an extensible computation graph model, which can represent a wide range of neural network architectures.

Core Concepts of ONNX

-

Nodes: Nodes represent operations in the computation graph, such as matrix multiplication, convolution, activation functions, etc. Each node has inputs and outputs, connecting them to form the computation graph.

-

Tensors: Tensors are multi-dimensional arrays used to store data in deep learning models. They flow through the computation graph, carrying information between nodes.

-

Graphs: A graph in ONNX is a collection of nodes that define the computation flow of a model. It consists of input and output nodes, representing the model’s inputs and outputs.

-

Models: An ONNX model is a collection of graphs, representing a complete neural network model. It includes the model’s structure, parameters, and metadata.

Structure of an ONNX Model

An ONNX model consists of the following components:

-

Model Metadata: Information about the model, such as its name, version, and description.

-

Graph: The computation graph that defines the model’s architecture and operations.

-

Inputs: The model’s input nodes, specifying the input data format and shape.

-

Outputs: The model’s output nodes, indicating the format and shape of the output data.

-

Initializers: Constant values used in the model, such as weights and biases.

-

Parameters: Additional information about the model, such as optimization settings and target device.

Example of an ONNX Model

Here’s an example of an ONNX model represented as a JSON file:

1

2

3

4

5

6

7

8

9

10

11

12

13

{

"model": {

"name": "MyONNXModel",

"graph": {

"nodes": [

{

"op": "Conv",

"inputs": ["data", "weights"],

"outputs": ["conv_output"]

},

{

"op": "Relu",

Open Neural Network Exchange (ONNX) is a pivotal open standard for representing machine learning models that has been widely adopted in the AI community. It allows for models to be moved seamlessly between various machine learning and deep learning frameworks, thus facilitating a more collaborative and flexible approach to model development and deployment.

The Computation Graph in ONNX

At the heart of an ONNX model lies the computation graph. This graph is a detailed representation of the operations (or nodes) that a neural network performs and the data (or tensors) that pass through these operations. The computation graph is directed and acyclic, meaning that it flows in one direction (from input to output) without any loops.

-

Nodes Nodes in the computation graph represent operations, such as mathematical computations, layer transformations, or activation functions. Each node takes one or more tensors as input and produces one or more tensors as output.

-

Tensors Tensors are the data structures that hold the numerical data. They can be thought of as multi-dimensional arrays. In the context of the computation graph, tensors flow between nodes, carrying data from one operation to the next.

-

Initializers Initializers are special tensors that provide the initial values for the model’s parameters, typically weights and biases. These are static and do not change during the model’s execution, unlike the tensors that represent variable data flowing through the graph.

ONNX Model Structure

An ONNX model encapsulates the computation graph and includes additional metadata that describes the model. The structure of an ONNX model can be broken down into the following components:

Graph: The core component that contains the nodes, tensors, and initializers. Model Metadata: Information about the model, such as the ONNX version used, the domain, and the model’s producer. Opset: Defines the set of operators (and their versions) used in the model. This is important for ensuring compatibility with ONNX runtimes. Inputs and Outputs: The model’s input and output specifications, including names and data types. The ONNX model is serialized into a Protocol Buffers (protobuf) binary file, which is a compact, cross-platform format.

Visualizing an ONNX Model

Visualizing an ONNX model’s computation graph can be incredibly insightful for understanding its architecture and for debugging purposes. Tools like Netron offer a graphical interface to explore the model’s structure.

When you open an ONNX model in Netron, you will see:

The nodes of the graph, each representing an operation, with their corresponding inputs and outputs. The flow of tensors through the graph, illustrating how data moves from one operation to the next. The initializers, which are typically connected to the nodes that represent the network’s layers. Example: Visualizing a Computation Graph Let’s consider a simple neural network model that has been exported to ONNX format. When visualized using Netron, the computation graph might look something like this:

Input Node: The starting point of the graph, where input data is fed into the model. Convolution Node: Represents a convolutional operation, which might be connected to the input node. Activation Node: An activation function, such as ReLU, connected to the convolution node. Fully Connected Node: A dense layer that is connected to the activation node. Output Node: The final node that outputs the prediction of the neural network. Each node is depicted as a block, and arrows indicate the flow of tensors from one node to the next. Initializers, which provide the weights for the convolution and fully connected nodes, are also shown, often as separate blocks linked to their respective nodes.

Conclusion

ONNX’s standardized format for machine learning models, centered around the computation graph, provides a clear and efficient way to represent complex neural networks. By visualizing these models, developers can gain a deeper understanding of the model’s operation sequence and data flow, which is crucial for optimization and troubleshooting. As the adoption of ONNX continues to grow, its role in ensuring interoperability and accelerating innovation in the AI field becomes increasingly significant.